🙂 Daily Quordle 984

6️⃣8️⃣

4️⃣3️⃣

m-w.com/games/quordle/

⬜🟩⬜🟨🟨 ⬜🟨🟩⬜⬜

⬜⬜⬜🟨⬜ 🟨🟨⬜⬜⬜

⬜🟨⬜⬜⬜ 🟨⬜⬜🟨⬜

⬜⬜⬜🟩⬜ ⬜🟨⬜🟩⬜

🟩🟩⬜🟩🟩 ⬜🟨⬜🟩⬜

🟩🟩🟩🟩🟩 ⬜🟨⬜🟩⬜

⬛⬛⬛⬛⬛ ⬜🟩🟩🟩🟩

⬛⬛⬛⬛⬛ 🟩🟩🟩🟩🟩

🟩⬜🟨⬜⬜ 🟨⬜🟨⬜🟨

⬜🟩🟨⬜⬜ 🟩🟨🟨🟨⬜

⬜⬜⬜🟨🟨 🟩🟩🟩🟩🟩

🟩🟩🟩🟩🟩 ⬛⬛⬛⬛⬛

- 0 Posts

- 68 Comments

Tightrope, a daily trivia game | Britannica

Oct. 4, 2024

T I G H T R O P E ✅ ✅ ✅ ✅ ✅ ✅ ✅ 💔 💔 🎉

My Score: 1880

Connections

Puzzle #481

🟨🟨🟨🟨

🟩🟩🟪🟪

🟪🟩🟩🟦

🟩🟩🟩🟩

🟦🟦🟦🟦

🟪🟪🟪🟪

Wordle 1,203 4/6

⬛🟨⬛⬛🟩

⬛⬛🟨⬛🟩

🟨🟩⬛⬛🟩

🟩🟩🟩🟩🟩

Strands #215

“Driver's catch-all”

🟡🔵🔵🔵

🔵🔵🔵

6·4 days ago

Oh, I thought he was going hard on the trolling, implying the bug was that the ads are broken when offline.

Blossom Puzzle, September 30

Letters: C E I T N O S

My score: 313 points

My longest word: 11 letters

💮 🌼 🏵 🌷 💐 🌺 🌸 🌹 🌻 💮 🌼

Sep. 30, 2024

T I G H T R O P E 💔 💔 ✅ ✅ ✅ ✅ ✅ ✅ 💔 🤕

My Score: 1250

Thought I was about to clinch an amazing comeback.

🙂 Daily Quordle 979

7️⃣9️⃣

3️⃣8️⃣

m-w.com/games/quordle/

⬜⬜⬜⬜🟨 ⬜⬜⬜🟩🟩

⬜⬜🟨⬜🟨 ⬜⬜⬜🟩🟩

⬜🟨🟨⬜🟨 ⬜🟨⬜⬜🟩

🟨🟨⬜🟨🟨 🟨⬜⬜🟨⬜

🟨⬜🟨🟨🟨 ⬜⬜🟨🟨⬜

🟩🟩🟩🟩⬜ ⬜🟨🟩⬜🟨

🟩🟩🟩🟩🟩 ⬜🟨🟩⬜⬜

⬛⬛⬛⬛⬛ ⬜⬜🟨⬜⬜

⬛⬛⬛⬛⬛ 🟩🟩🟩🟩🟩

⬜⬜⬜⬜🟩 ⬜🟩⬜⬜🟨

🟩⬜🟩⬜🟩 ⬜⬜⬜⬜🟨

🟩🟩🟩🟩🟩 ⬜⬜⬜⬜🟨

⬛⬛⬛⬛⬛ ⬜⬜⬜🟩🟨

⬛⬛⬛⬛⬛ ⬜⬜🟩⬜🟨

⬛⬛⬛⬛⬛ 🟩🟨⬜⬜⬜

⬛⬛⬛⬛⬛ 🟩🟨⬜⬜⬜

⬛⬛⬛⬛⬛ 🟩🟩🟩🟩🟩

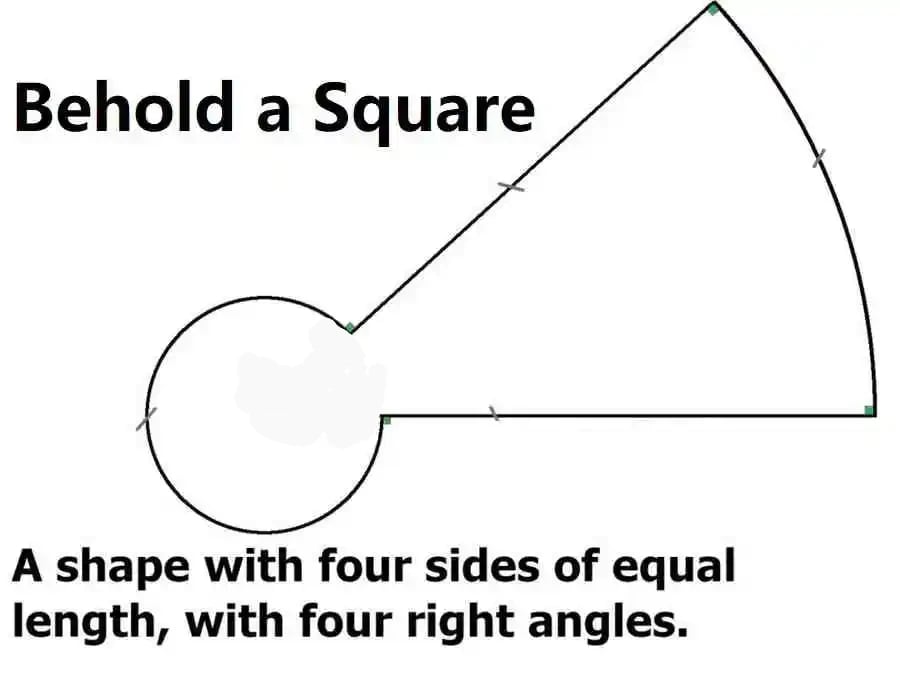

The part showing the angle is not part of it. They should’ve made it a dotted line or something.

Though, two of the 90° angles are actually 270°.

I was born in the dark ages. Before 2014, we used to think the world was flat and didn’t think about climate change.

5·22 days ago

Aye. I’m still on my S20+. Need a replacement battery, but otherwise nothing new is worth the cost yet. Pixels be the same Pixels, Samsungs the same Samsungs. Where the upgrades are happening, I just won’t see them, so nothing “new”.

It’s like being subscribed to a toddler in the “why” phase.

When having a beer, I’ve always found it funny that for the pint, one of the few imperial measurements metric nations kept around, America went and invented its own. Uncharacteristically, a smaller version too.

Nah. It starts out like THUD! THUD! and then slowly after a couple minutes of warming up, that goes all muffled and it becomes that familiar high-pitched ringing noise.

12·28 days ago

You just made me realise I’m a gamer, not a Fortniter. But I probably should’ve realised that based on my Steam “years of service” and disgustingly large catalogue.

I’m a proven guaranteed money pot, publishers! Make me something good and I give the moneys!

3·1 month ago

And no insects!

1·1 month ago

I think it was the puzzles and lack of guidance. Not really knowing if I’m in the right place doing the right thing. Maybe I’ll try again with a bit of a guide until it hooks in and I get it.

3·1 month ago

I tried, I really did. But a few hours in, I just didn’t like the gameplay, even though I thought I’d love it, and the other new games I had waiting won out.

Maybe I should grind through. Is there a point where it suddenly gets good a few hours in? Or is it just not for me, despite everything on the book’s cover?

Blossom Puzzle, October 4

Letters: C G I A M N P

My score: 289 points

My longest word: 9 letters

🌸 🌹 💮 💐 🌻 🏵 🌷 🌺 🌼