Cat’s Cradle and Anathem are among my absolute favorites.

No, it’s “biologically.”

Huh. Grandpa Simpson was right. It did happen to me too.

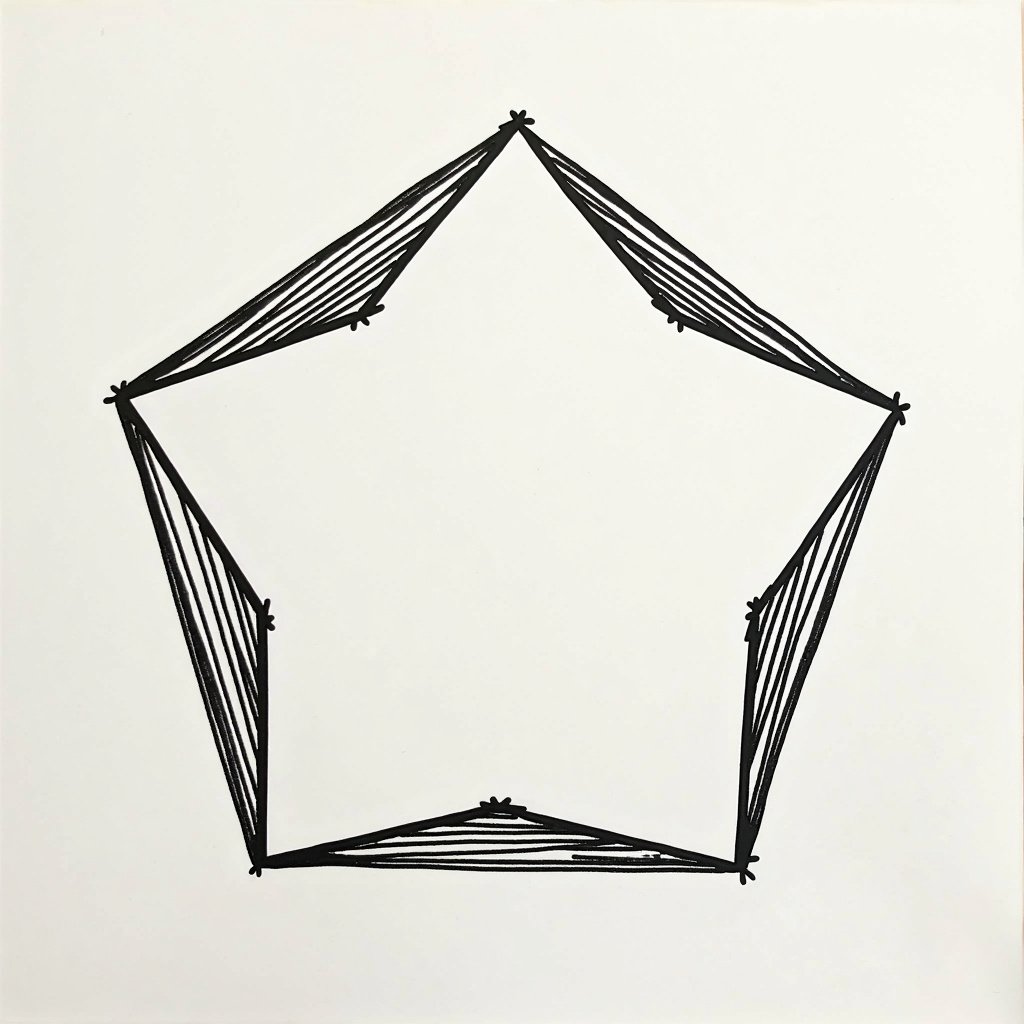

I don’t really know, but I think it’s mostly to do with pentagons being under-represented in the world in general. That and the specific way that a pentagon breaks symmetry. But it’s not completely impossible to get 'em to make one. After a lot of futzing around, o1 wrote this prompt, which seems to work 50% of the time with FLUX [pro]:

An illustration of a regular pentagon shape: a flat, two-dimensional geometric figure with five equal straight sides and five equal angles, drawn with black lines on a white background, centered in the image.

Fun Fact: It is very difficult to get any of the image generators to make a pentagon.

I think it was Perplexity. Moved to using Flux since that cuddly monstrosity.

Thank you. For as much as this post comes up, I hope people are at least getting an education.

It’s an old game, but PixelJunk Monsters is a ton of fun as a co-op tower defense. Don’t bother with the sequel - s’bad.

As a chaote, it honestly didn’t register as satire. But if it is, it’s spot on.

This type of segmentation is of declining practical value. Modern AI implementations are usually hybrids of several categories of constructed intelligence.

Fair-weather friends to AI crack me up.

Ironically, if they’d used an LLM, it would have corrected their writing.

Just looking at the mental space of the three fanboy types… Mac seems the chilliest to hang with.

e: lol, case in point

I like to think about the spacefaring AI (or cyborgs, if we’re lucky) that will inevitably do this stuff in our stead, assuming we don’t strangle them in the cradle.

What makes the “spicy autocomplete” perspective incomplete is also what makes LLMs work. The “Attention Is All You Need” paper that introduced the Transformer architecture describes self-attention, the mechanism that lets the model weigh every other word in the context when predicting the next one. In the process of writing the next word of an essay, it navigates a 22,000-dimensional semantic space. And the similarity to the way humans experience language is more than philosophical - the advancements in LLMs have sparked a bunch of new research in neurology.
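For anyone curious what "attention" actually computes, here's a toy, pure-Python sketch of single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)V, the core operation from that paper. The function names, the tiny hand-picked weight matrices, and the optional causal mask (which hides future words, as in next-word prediction) are my own illustrative assumptions, not any library's API. Real models use many heads, learned weights, and vectors with thousands of dimensions.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain nested-list matrix multiply: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention (toy sketch).

    x: one vector per token; w_q, w_k, w_v: hypothetical projection matrices.
    Returns (outputs, attention_weights).
    """
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token offers
    v = matmul(x, w_v)  # values: what each token passes along
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # Token i may only attend to tokens 0..i (no peeking ahead).
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    # Each output is a weighted blend of the value vectors.
    return matmul(weights, v), weights
```

With identity projections and three 2-D "tokens", the first token under a causal mask can only attend to itself, so its weights come out as `[1.0, 0.0, 0.0]`, while later tokens blend information from the words before them.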

Not OP, but speaking from a fairly deep layman understanding of how LLMs work - all anyone really knows is that capabilities of fundamentally higher orders (like deception, which requires theory of mind) emerged by simply training larger networks. Since we don’t have a great understanding of how our own intelligence emerges from our wetware, we’re only guessing.

I was just being a smartass, but I appreciate your commitment to clear communication.